How to do this Growth Hack and what can it do for you?

You are familiar with this page: you click on a link, but instead of getting the desired site, an error is displayed, indicating that the requested page is not available.

Something like “404 Not Found,” this message indicates that the page was not found and search engines will punish you if you don't make the change quickly.

What are the expected results of this Growth Hack?

- Type of marketing tactic: Free SEO analysis of your site

- Expected results: Greater responsiveness to repair 404 errors on your website.

Why use this Growth Hack?

Maybe like me, you don't like to wait for Google Search Console to alert you of 404 errors on your website.

This gives a bad signal to Google on your website and let's say that I don't like to make a bad impression in the eyes of search engines.

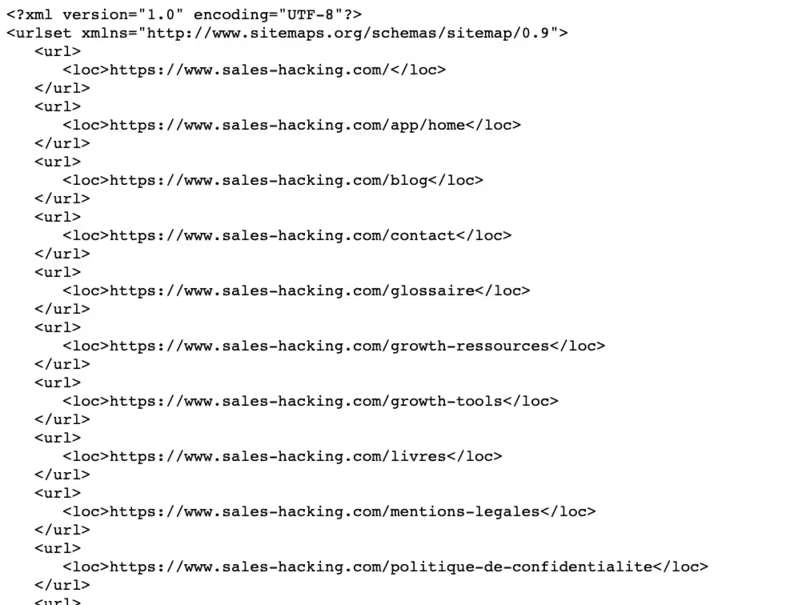

To analyze the URLs of your website, Google will look at the web addresses of your sitemap:

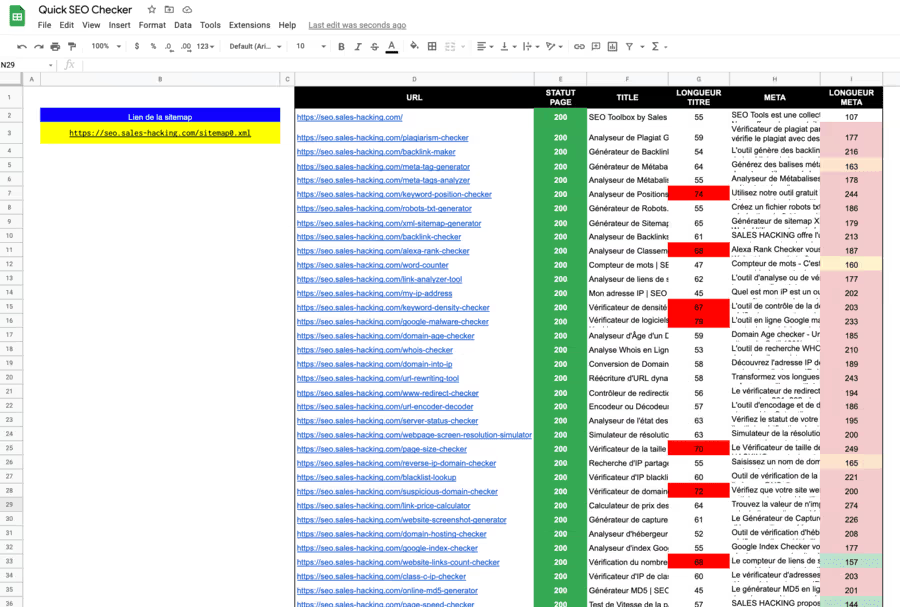

To quickly see if the pages in your sitemap are not in error, we will go through the web pages ourselves with Google Sheet to determine which ones are in error or not.

How do you do this Growth Hack?

The first step will be to enter the address of your sitemap in the Google Spreadsheet file.

.png)

DOWNLOAD: 👉 Google SpreadSheet file

If you download the file, all you have to do is fill in this step but I will still explain the features below.

Importing all URLs into the sitemap.xml file

=IMPORTXML (” https://www.siteweb.com/sitemap.xml “,”//* [local-name () ='url'] /* [local-name () ='loc']“)

This code retrieves all URLs from the sitemap file.

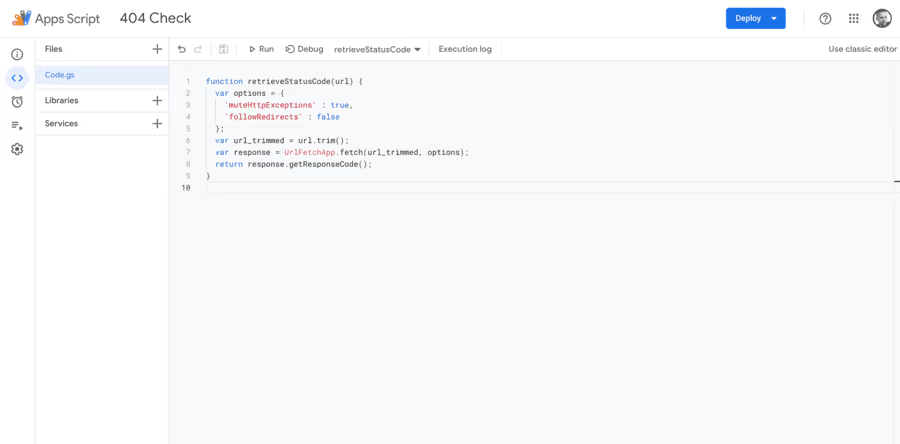

Retrieving page status with Google Apps Script

In the toolbar, go to the Tools menu and click on Extensions -> Apps Scripts

This will open a new page where you can write your custom function, which is as follows:

function retrieveStatusCode (url) {

var options = {

'muteHttpExceptions': true,

'followRedirects': false

};

var url_trimmed = url.trim ();

var response = UrlFetchApp.fetch (url_trimmed, options);

return response.getResponseCode ();

}

The purpose of this function is to take a single URL and return the Http status code returned when connecting to that address.

Therefore, I'm going to pass a single parameter to the function: each of the sitemap URLs.

Then, I create a variable called response and use the fetch method of the URLFetchApp class to make a request to retrieve the URL that will return the HTTP response to me.

I then use the getResponseCode () method to get the response code: a code that indicates a good link is 200.

The famous 404 means that the page is not found/removed, and 3xx means a page redirect.

So using XML IMPORT and this piece of code, I am in a position to verify in real time each of the addresses of a website even if web pages are added or deleted.

Here are then 2 quick functions to check all the titles and meta descriptions of your pages using the function again IMPORT.XML().

Get the page title from the URL

=IMPORTXML (” https://www.example.com/sample-url “, “//title/text ()”)

This code allows you to get the title of the page, the title of a page is placed inside the tag <title>.

Get the meta description of the page from the URL

=IMPORTXML (” https://www.example.com/sample-url “, “//meta [@name ='description']/@content “, “, “//meta [='description']/“)

This code allows you to get the meta description of the page.

The meta description is placed in the tag <meta name="description” content="sample content”>.

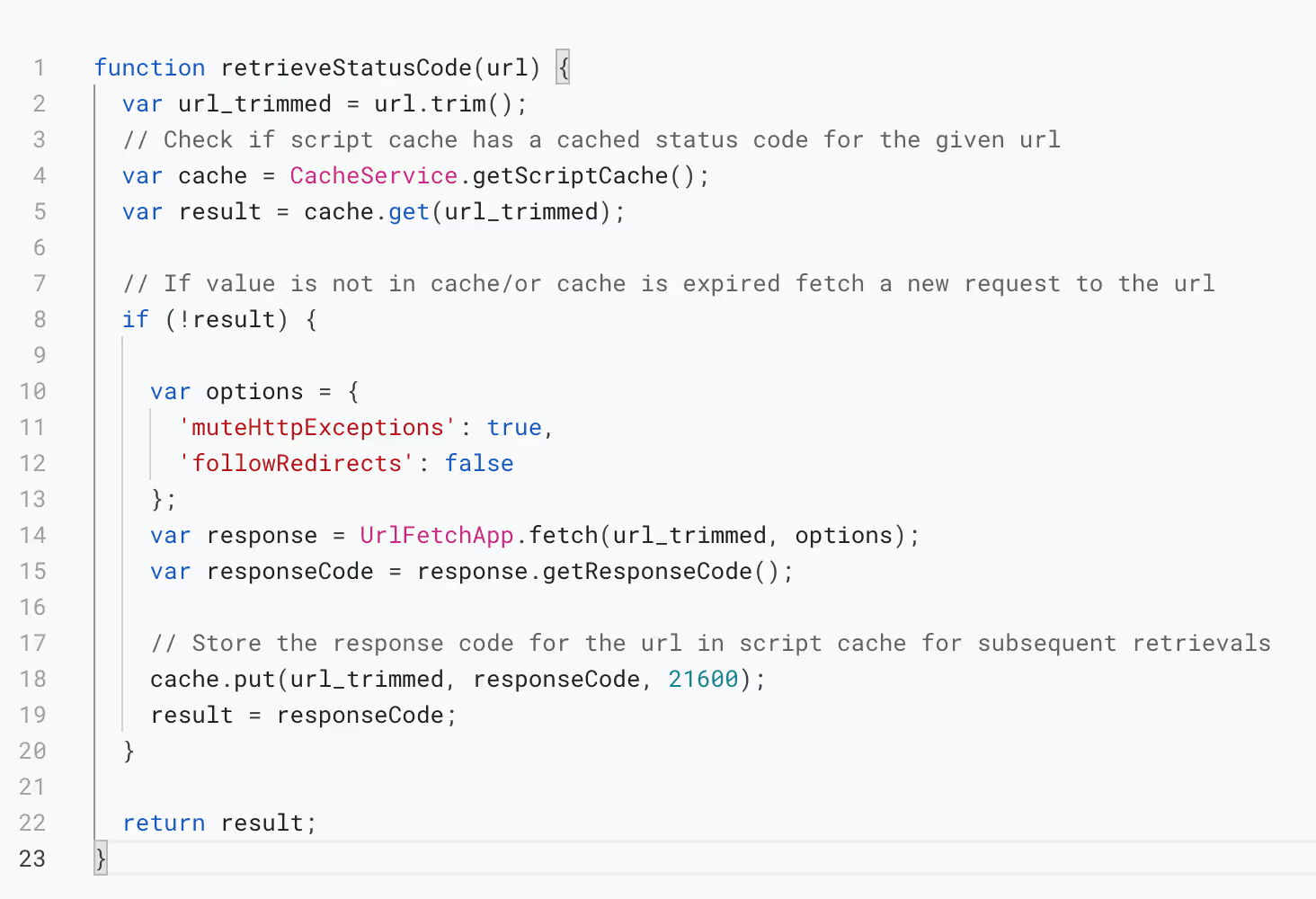

BONUS: Avoid exceeding Google's quotas

Google allows us a quota each day to make queries.

To solve this problem, we can use an object CacheService which creates a cache (local storage) linked to our script.

What is different from the first code we did in Google Apps Scripts?

We created a cache that will contain all the URL/responseCode pairs that we stored during previous runs.

If this is the first time the script sees this url, the result will be undefined and the script will execute the code.

Ok but for the URLs that the script has already gone through?

When you first open the spreadsheet one day, it will execute all queries from scratch for each url you've defined.

However, for the next 6 hours, regardless of how many times you refresh the page or the number of users viewing it, the script retrieves the results from the cache instead of asking for them again and again, saving time and avoiding the quota limit.

You can always add new urls to the sheet and they will search for new queries at first and then the results will be stored in the same cache.

And that's it! You can use these few lines of simple javascript code to exploit Google application scripts and run them on hundreds or thousands of URLs in a spreadsheet without having to manually click on each link.

.svg)